Site Reliability Engineering Culture Patterns

Who should read this:

Developers new to Site Reliability Engineering (SRE) who want to understand the culture

Current SREs who want to guide others, such as management, on key aspects of SRE culture

Technical leaders who want to create an ideal culture for effective software reliability practices

Introduction

Despite its now antiquated-sounding name, Site Reliability Engineering (SRE) as a discipline shows strong promise for proactively improving software reliability in production.

As software complexity continues to increase, so will the need for better SRE practice.

It is undoubtedly an exciting but enigmatic field, and how to practice SRE remains widely open to interpretation.

The first episode of the On Call Me Maybe Podcast [reminded] me that most SREs are beginners, we're in unchartered territory.

- Stephen Townshend, Developer Advocate at SquaredUp

This quote is interesting because Stephen Townshend is far from a beginner, yet his sentiment makes sense: SRE is a constantly moving target.

In my experience, many teams operate very differently from the model outlined in Google's 2016 book, Site Reliability Engineering.

And they should. Because they are not Google.

Instead, I propose a few culture patterns that I've picked out from reading hundreds of posts on the topic and talking with dozens of engineers in the discipline.

This post explores seven cultural patterns that I've identified as forming Site Reliability Engineering culture.

It's not perfect, but it will give a clearer picture to those refining their SRE culture.

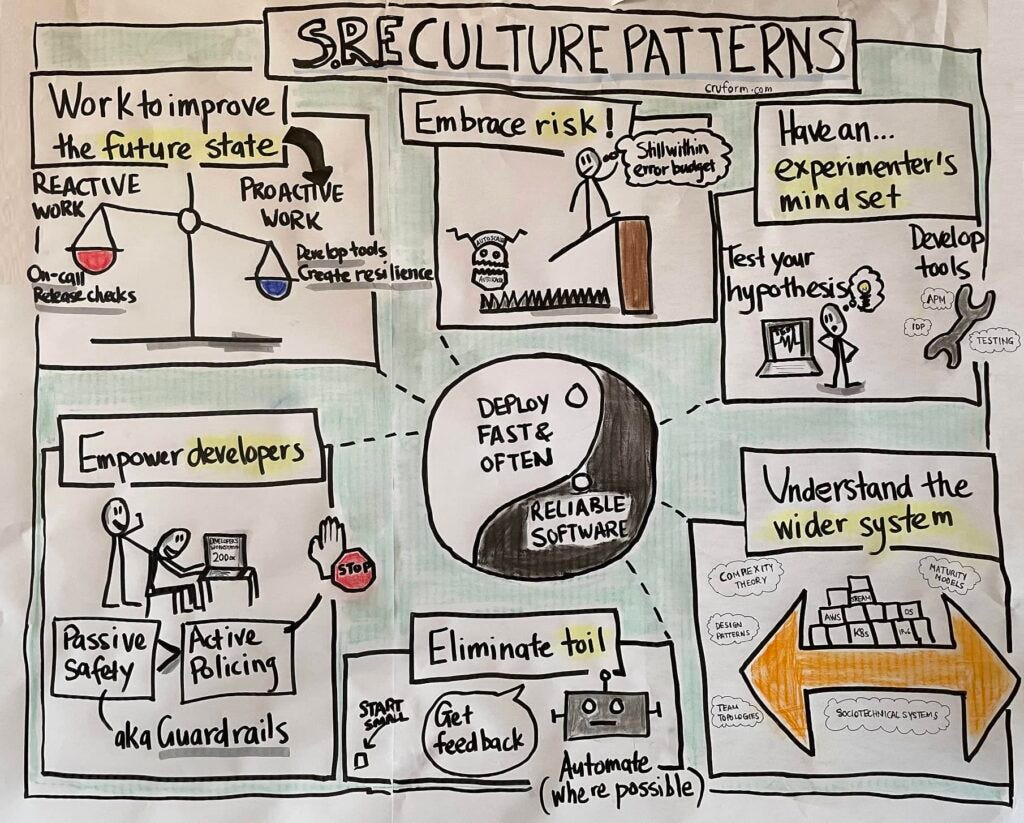

The image above is the complete visual summary, which I will break down and walk through in the rest of the post.

SRE Culture Pattern #1: Balance competing priorities

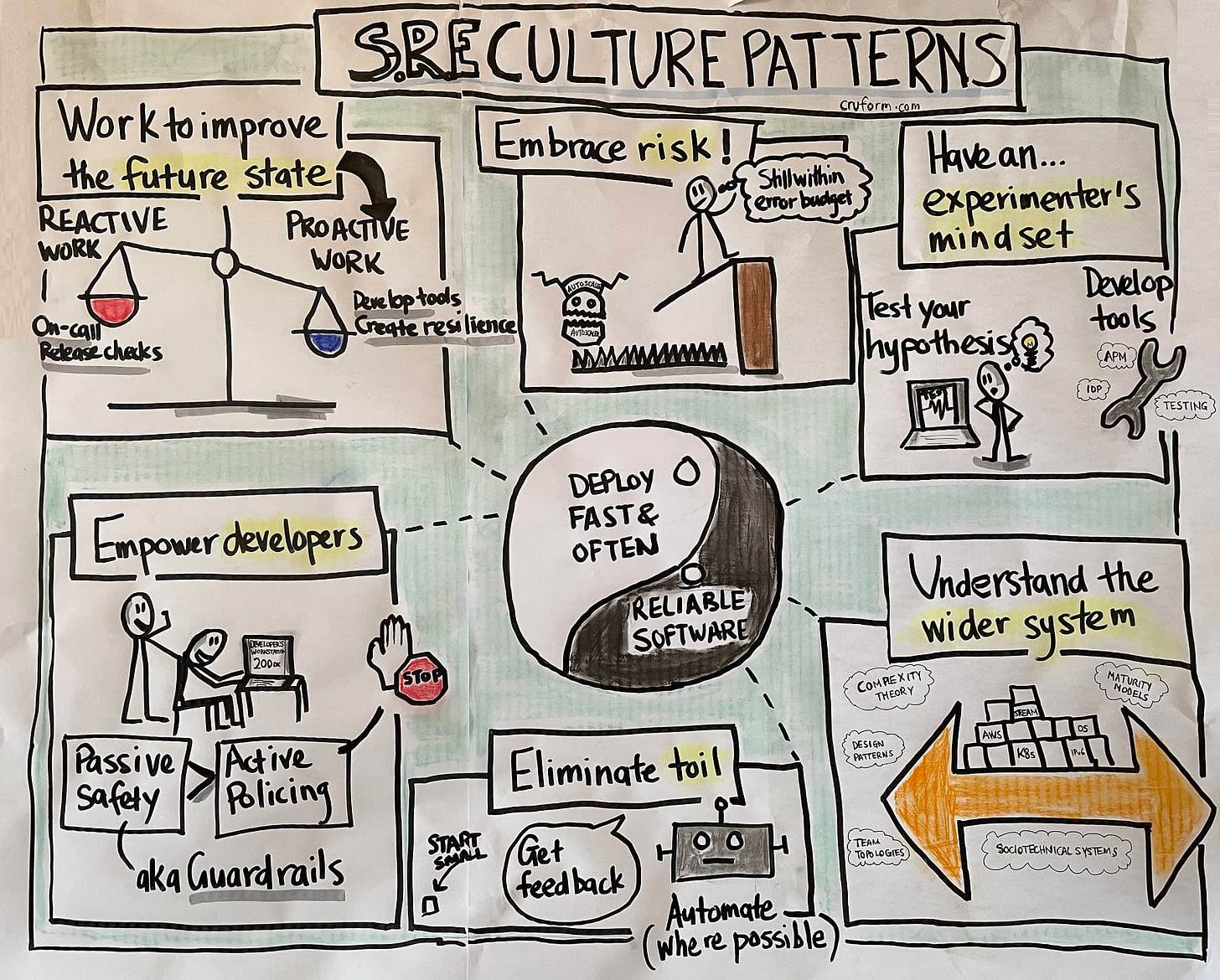

SRE can be considered a balancing act between two counteracting forces within the software organization.

On one end, there's the desire to deploy releases fast and often. On the other end is the need for reliable software with acceptable uptime.

In traditional software contexts, neither end sees the true benefit of the other.

Feature developers want to release features often to keep the product competitive, which increases the risk of software breaking in production.

Operations teams want reliable software. Their true north is stability, stability, stability. This puts them at odds with how product developers measure the software's success.

There's a common quip that goes like this:

If it were up to sysadmins and operations, the software would get released once and that's it.

Examples of change include new code deployments and updates to infrastructure configs.

Operations people have traditionally hated change because, in their minds, change threatens software that is running perfectly.

The thinking goes that more change → more potential for errors → more pager alerts.

What could be more stable (and peaceful) than not making any changes to the system?

The risk of this thinking is that, in some situations, deployments become over-engineered and turn into an onerous compliance process.

We will explore this later, when I explain the need to empower developers.

In short, both ends have their merits. If you don't deploy often, your software can go stale and lose its competitiveness in the market.

At the same time, if you release without considering production stability, customers en masse may open the page only to see HTTP 500 errors.

SRE brings about a mindset that respects both developer and operations camps.

It supports developers' need to ship fast and often while doing the work to ensure the software runs reliably.

It's a balanced approach to deploying features into production quickly without the software falling apart or taking down adjacent services. The remaining patterns help SREs achieve this goal gracefully.

Keep reading.

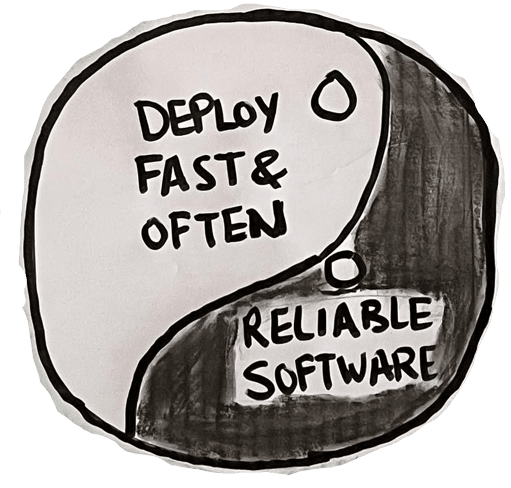

SRE Culture Pattern #2: Eliminate toil

What does toil mean?

It means doing manual, repetitive work. Let's refine this definition further:

Toil is boring or repetitive work that does not gain you a permanent improvement.

- Jennifer Mace (Macey), Google SRE

Responding to incidents is not toil until it becomes a pattern: the same incident on the same system, responded to over and over.
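One way to spot that pattern is to count incidents by system and type. The sketch below is a minimal illustration, assuming a hypothetical export of incident records as `(system, incident_type)` pairs and an arbitrary threshold of three repeats; real incident trackers will have their own fields.

```python
from collections import Counter

# Hypothetical incident records: (system, incident_type).
# In practice these would come from an incident tracker export.
incidents = [
    ("checkout-api", "disk-full"),
    ("checkout-api", "disk-full"),
    ("search", "oom-kill"),
    ("checkout-api", "disk-full"),
    ("search", "cert-expiry"),
]

def toil_candidates(records, threshold=3):
    """Return (system, incident_type) pairs seen at least `threshold` times.

    A repeated incident on the same system suggests that responding to it
    has become toil and is worth automating or fixing at the root.
    """
    counts = Counter(records)
    return {key: n for key, n in counts.items() if n >= threshold}

print(toil_candidates(incidents))  # {('checkout-api', 'disk-full'): 3}
```

The threshold is a judgment call; the point is to turn "we keep seeing this" into a list you can prioritize.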

An example of eliminating toil is automating a release process instead of continuing to review releases manually.

If you are following a runbook and notice that you run steps 1, 2, 3, and 4 regularly, work out how to automate them. Write a cron job or script.
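As a starting point, the manual steps can be wrapped in a small script that runs them in order and stops at the first failure. This is a minimal sketch: the step names and the `run_runbook` helper are hypothetical, and each placeholder lambda stands in for the real work (an API call, `subprocess.run`, and so on).

```python
import logging
from typing import Callable, List, Tuple

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("runbook")

# A runbook step is a name plus a function that returns True on success.
Step = Tuple[str, Callable[[], bool]]

def run_runbook(steps: List[Step]) -> List[str]:
    """Run steps in order, stopping at the first failure.

    Returns the names of the steps that completed successfully.
    """
    completed = []
    for name, action in steps:
        log.info("running step: %s", name)
        if not action():
            log.error("step failed, stopping: %s", name)
            break
        completed.append(name)
    return completed

# Hypothetical placeholders for "Step 1, 2, 3, 4" of a manual runbook.
steps: List[Step] = [
    ("1. drain node", lambda: True),
    ("2. rotate logs", lambda: True),
    ("3. restart service", lambda: True),
    ("4. verify health check", lambda: True),
]

if __name__ == "__main__":
    done = run_runbook(steps)
    log.info("completed %d of %d steps", len(done), len(steps))
```

Once the steps live in a script, a cron entry (or a Kubernetes CronJob) can run it on a schedule instead of a person.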

A key aspect of eliminating toil is finding out where it exists. That means getting feedback from stakeholders like developers, operations, and everyone involved in the software development lifecycle (SDLC).

There’s a saying that SREs should automate themselves out of a job. Why are they not concerned about this? Because there will ALWAYS be a new challenge.

So what does automation mean in the SRE sense?

It’s not about AI and all that funky business. It can be as simple as not clicking around or manually typing in commands every time something needs to happen.

What does reducing toil look like?

Approaches range from simple CronJobs for scheduled tasks all the way up to AIOps.

The key is to start small and scale up your efforts as you see improvements come to fruition.

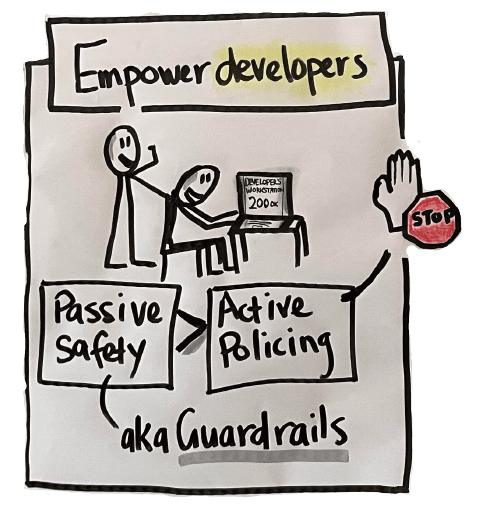

SRE Culture Pattern #3: Empower developers

I previously mentioned that operations can put up a lot of roadblocks in the name of stability.

In some situations, deployments turn into an onerous compliance process.

The process may employ methods to slow down or prevent changes altogether.

This can include strict release policies, lengthy compliance checklists, and approval workflows involving non-technical management.

In many organizations, the last of these takes the form of a CAB, or Change Approval Board. A word to those running CABs: developers hate them, and they greatly slow software velocity.

Being cautious is a virtue, but being overcautious can be disastrous. Onerous checks and balances that slow down deployment to production can hurt in many ways. They can:

hurt developer morale

create rifts between feature and operations teams

slow down deployments of critical new features

reduce business competitiveness due to slowed releases

Consider these ways for SREs to achieve reliable systems without creating animosity with developers:

Offer an easy-to-follow pre-launch checklist for developers

Offer "Golden Paths" (as Spotify does): opinionated, supported ways to package code for production
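An easy-to-follow checklist can also be made machine-checkable, so developers get instant feedback instead of waiting on a review. The sketch below assumes a hypothetical `Service` description and checklist items chosen only for illustration; your own checklist and data source will differ.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

@dataclass
class Service:
    # Hypothetical fields a pre-launch checklist might inspect.
    name: str
    has_health_check: bool
    has_dashboards: bool
    has_runbook: bool
    replicas: int
    owners: List[str] = field(default_factory=list)

# Each checklist item is a human-readable description plus a predicate.
CHECKLIST: Dict[str, Callable[[Service], bool]] = {
    "health check endpoint configured": lambda s: s.has_health_check,
    "dashboards exist": lambda s: s.has_dashboards,
    "runbook linked": lambda s: s.has_runbook,
    "at least 2 replicas": lambda s: s.replicas >= 2,
    "owning team listed": lambda s: len(s.owners) > 0,
}

def prelaunch_report(service: Service) -> List[str]:
    """Return the checklist items the service does NOT yet satisfy."""
    return [item for item, check in CHECKLIST.items() if not check(service)]

svc = Service("payments", has_health_check=True, has_dashboards=False,
              has_runbook=True, replicas=1, owners=["team-payments"])
print(prelaunch_report(svc))  # ['dashboards exist', 'at least 2 replicas']
```

Returning only the unmet items keeps the feedback short and actionable, which fits the goal of guiding developers rather than gatekeeping them.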

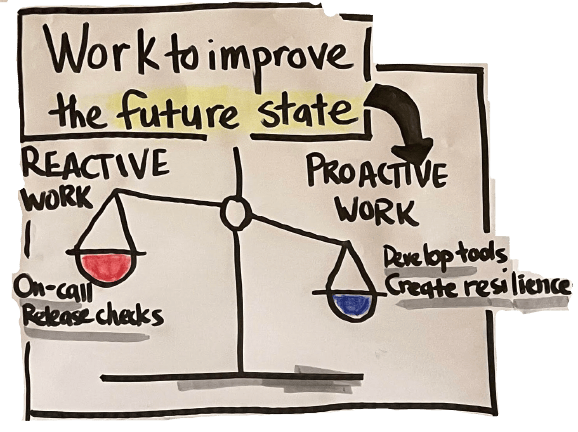

SRE Culture Pattern #4: Work to improve the future state

One of Google's original SRE rules was that Site Reliability Engineers should keep operational (toil) work below 50% of their time.

This is one of those adages that translates well beyond Google's sphere of influence.

"At least 50% of each SRE's time should be spent on engineering project work that will either reduce future toil or add service features."

- Eliminating toil, Site Reliability Engineering (2016)
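Checking a team against this guideline is simple arithmetic once time is tagged. This minimal sketch assumes a hypothetical week of time entries, each labeled either "toil" (reactive operational work) or "project" (engineering work that reduces future toil or adds features).

```python
# Hypothetical week of time entries for one SRE: (task, category, hours).
week = [
    ("on-call", "toil", 10.0),
    ("manual release review", "toil", 6.0),
    ("automate release pipeline", "project", 14.0),
    ("chaos experiment", "project", 8.0),
    ("ticket triage", "toil", 2.0),
]

def toil_fraction(entries):
    """Share of logged hours spent on toil (0.0 if nothing is logged)."""
    total = sum(hours for _, _, hours in entries)
    toil = sum(hours for _, kind, hours in entries if kind == "toil")
    return toil / total if total else 0.0

fraction = toil_fraction(week)
print(f"toil: {fraction:.0%}")  # toil: 45%
print("within 50% guideline" if fraction <= 0.5 else "over 50% guideline")
```

Tracking this over several weeks, rather than one, gives a fairer picture of whether SREs are stuck in reactive work.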

I've noticed a recurring trend of "I want to quit SRE" posts on r/sre, along with a common thread among the people posting them.

These SREs are burnt out and/or want to quit their current role to move back to developer work.

Many join with the (correct) assumption that they will be doing proactive, future-changing work.

But they spend far more than 50%, sometimes almost 100%, of their time on reactive operational work like being on call or inspecting pre-launch configurations.

What sets an SRE apart from operational counterparts like sysadmins is the ability to create tooling that makes the system more resilient to future failures.

All this without having to physically be there.

So please, let your SREs do as much proactive work as they can handle.

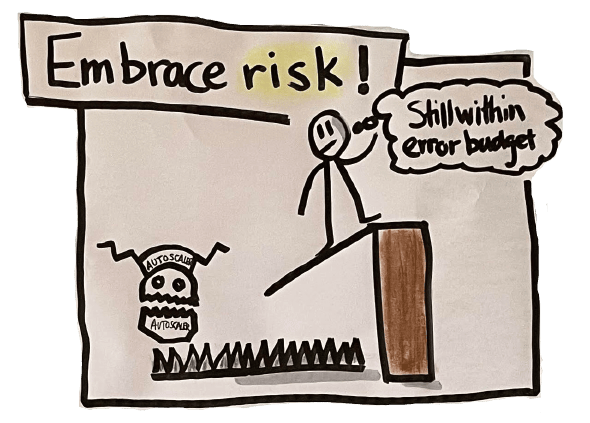

SRE Culture Pattern #5: Embrace risk

Let's wait for a moment while the IT traditionalists clear the room. Okay, now we can talk about risk... but we will aim to embrace it rather than "mitigate it".

Risk mitigation, such as change approval workflows, is often cast as the good guy in fast-moving and complex situations, but in my experience it often isn't.

It can bog down progress.

Site Reliability Engineering offers an alternative view of handling risk through the lens of error budgets.

An error budget is the margin of downtime allowed in a given period. If your reliability target is 99.9%, your error budget is 0.1%, or about 43.83 minutes in an average month.
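The 43.83-minute figure comes from multiplying the allowed unavailability by the length of an average month (365.25 / 12 = 30.4375 days, or 43,830 minutes). A small helper, sketched here as a hypothetical `error_budget_minutes` function, makes the arithmetic explicit for any target.

```python
# Average Gregorian month: 365.25 days / 12 = 30.4375 days = 43,830 minutes.
MINUTES_PER_AVG_MONTH = 365.25 / 12 * 24 * 60

def error_budget_minutes(slo: float, period_minutes: float = MINUTES_PER_AVG_MONTH) -> float:
    """Allowed downtime in minutes for an availability target such as 0.999."""
    return (1 - slo) * period_minutes

for slo in (0.99, 0.999, 0.9999):
    print(f"{slo:.2%} -> {error_budget_minutes(slo):.2f} min/month")
```

For 99.9% this gives about 43.83 minutes. Each extra "nine" shrinks the budget tenfold, which is why the target should reflect what users actually need rather than an aspiration.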

With error budgets, teams can take risks that may cause downtime, as long as the total downtime stays within the error budget.
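In practice, this turns into a running tally: subtract the downtime already incurred from the budget, then ask whether a risky change still fits. The sketch below is a hypothetical release gate, assuming downtime is recorded in minutes per incident and that the team can estimate a change's potential downtime; neither function comes from a specific tool.

```python
from typing import List

def remaining_budget(slo: float, period_minutes: float, downtime_minutes: List[float]) -> float:
    """Minutes of error budget left after the recorded downtime."""
    budget = (1 - slo) * period_minutes
    return budget - sum(downtime_minutes)

def can_take_risk(slo: float, period_minutes: float,
                  downtime_minutes: List[float], expected_cost_minutes: float) -> bool:
    """Hypothetical gate: allow a risky change only if its estimated
    downtime still fits inside the remaining budget."""
    return expected_cost_minutes <= remaining_budget(slo, period_minutes, downtime_minutes)

MONTH = 43_830            # minutes in an average month
incidents = [12.0, 20.0]  # downtime so far this month, in minutes

print(round(remaining_budget(0.999, MONTH, incidents), 2))               # 11.83
print(can_take_risk(0.999, MONTH, incidents, expected_cost_minutes=5))   # True
print(can_take_risk(0.999, MONTH, incidents, expected_cost_minutes=15))  # False
```

The useful property is that the decision is numeric and agreed in advance, rather than argued case by case in an approval meeting.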

By the way, the scary autoscaler in the image is an inside joke for those who use Kubernetes (K8s). Many K8s users have heard of, or experienced, bizarre issues with the autoscaler. It can bring down a production system and chew up your error budget in one go.

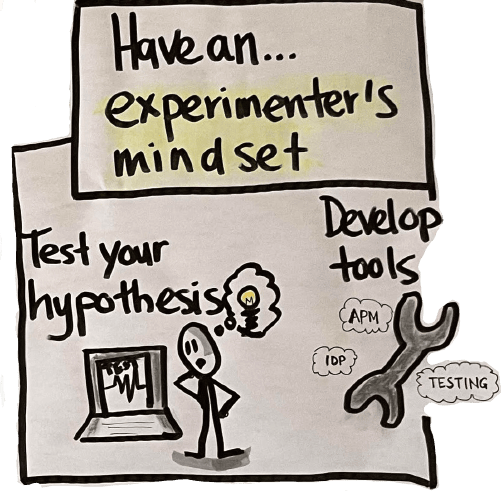

SRE Culture Pattern #6: Have an experimenter's mindset

Remember that SREs should spend at least 50% of their time on non-reactive, non-operational work. This time should be partly dedicated to experiments.

That’s when the error budget can get burned down.

This can include chaos engineering experiments that test the resilience of parts of the system.
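A chaos experiment follows a simple shape: state a hypothesis, inject a fault, and measure whether the system still meets its target. The toy sketch below injects failures in-process around a hypothetical dependency and tests whether a retry policy keeps the success rate high; real chaos engineering usually injects faults at the infrastructure level (killed instances, added latency, dropped network traffic), so treat this only as an illustration of the method.

```python
import random
from typing import Callable

def inject_faults(func: Callable[[], str], failure_rate: float,
                  rng: random.Random) -> Callable[[], str]:
    """Wrap `func` so a fraction of calls raise, simulating a flaky dependency."""
    def wrapper() -> str:
        if rng.random() < failure_rate:
            raise ConnectionError("injected fault")
        return func()
    return wrapper

def call_with_retry(func: Callable[[], str], attempts: int = 3) -> str:
    """The resilience mechanism under test: retry before giving up."""
    last_error = None
    for _ in range(attempts):
        try:
            return func()
        except ConnectionError as err:
            last_error = err
    raise last_error

def run_experiment(failure_rate: float, requests: int = 1000, seed: int = 42) -> float:
    """Return the observed success rate under the injected failure rate."""
    rng = random.Random(seed)  # seeded so the experiment is repeatable
    flaky = inject_faults(lambda: "ok", failure_rate, rng)
    successes = 0
    for _ in range(requests):
        try:
            call_with_retry(flaky)
            successes += 1
        except ConnectionError:
            pass
    return successes / requests

# Hypothesis: with 3 attempts, a 10% dependency failure rate keeps success >= 99.5%.
rate = run_experiment(failure_rate=0.10)
print(f"success rate: {rate:.2%}")
print("hypothesis holds" if rate >= 0.995 else "hypothesis rejected")
```

Seeding the random generator makes the run reproducible, which matters when comparing results before and after a change.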

To gather experiment results, Site Reliability Engineers need, at a minimum, a good observability setup. That means adequate logging, tracing, and monitoring.

In experimenter mode, SREs can use the data they gather to create automation tools, application performance monitoring (APM) tools, and more.

The final step in creating these tools is to test them in a controlled way and evaluate the observability results.

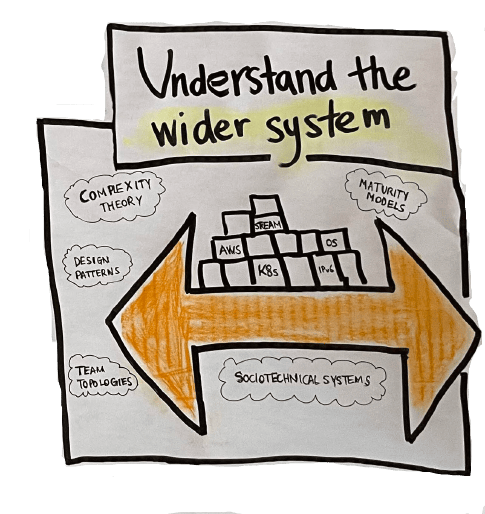

SRE Culture Pattern #7: Understand the wider system of work

When a system has become unstable or failed, several things across many facets of the system may have gone wrong.

That’s why SREs need to keep their finger on the pulse of many things and do much more than incident response and running post-mortems (JC van Winkel, 2017).

As SREs increase in seniority, they get involved in architecture reviews. This calls for a broader understanding of how the underlying system has been designed.

It's also helpful to understand topics beyond day-to-day technical work that can inform your approach.

Examples of these include:

Complexity theory, in particular the work of Sonja Blignaut and Dave Snowden (the Cynefin framework)

Team Topologies by Matthew Skelton and Manuel Pais

The Capability Maturity Model for software development, developed at Carnegie Mellon University

Sociotechnical systems, exploring the relationship between human social dynamics and technical systems

New design patterns in underlying systems as they evolve, e.g., container patterns in Kubernetes

Parting words

Site Reliability Engineering is a proactive approach to the growing software complexity problem. Reliability is at the heart of the function.

Site Reliability Engineers are production-level engineers focused on the performance of software once it enters the real world.

An SRE's north star was originally software reliability.

“We want to keep our site up, always.”

- JC van Winkel, Site Reliability Engineer at Google Zurich

This north star is evolving as SREs take a broader interest in software that is reliable, secure, and performant. It's an interesting future for Site Reliability Engineering.